AM207 - Stochastic Methods for Data Analysis, Inference and Optimization

This is an introductory graduate course on probabilistic modeling and inference for machine learning.

Course Project Description

The aim of the project is to acclimate you to the process of conducting research in statistical modeling or machine learning: 1) tackle an intimidatingly general problem, 2) narrow the scope to a specific set of parameters to study, 3) replicate existing literature studying these parameters, 4) innovate incrementally on top of existing work, and 5) consider the wider socio-technological impact of your work.

Students who make meaningful, novel, or insightful contributions in their course projects have the opportunity to develop their project into a workshop or conference publication during the Spring 2022 semester. Examples of publications from AM207 Fall 2021:

- Promises and Pitfalls of Black-Box Concept Learning Models

- Safety & Exploration: A Comparative Study of Uses of Uncertainty in Reinforcement Learning

Due:


- (October 2nd) Project Checkpoint #1: Team Formation
  - Submit the names of your team members
- (October 23rd) Project Checkpoint #2: Paper Selection
  - Meet with the instructor to discuss paper choice prior to this date
  - Submit the name of your paper
- (November 6th) Project Checkpoint #3: Paper Overview
  - Meet with your assigned TF prior to this date to discuss your paper
  - Submit a summary of the high-level ideas of your paper
- (November 20th) Project Checkpoint #4: Pedagogical Examples
  - Meet with the instructor prior to this date to discuss plans for implementation and pedagogical examples
  - Show implementation/experimentation in progress
- (December 17th) Final Deliverable Due


Grading: Your grade is based on:

- How well your deliverable satisfies the project specifications (described below)

- Your progress leading up to the final deliverable. There will be a series of checkpoints with your project TF and instructor throughout the semester. Your final grade will account for how much progress you have made by each checkpoint.

Instructions:

- Team: Form a team of 2-4 people

- Topic: Choose a paper from the approved list of papers (at most 2 teams per paper, assigned on a first-come, first-served basis). You may propose your own paper, but it must be approved by your instructor.

- Mentoring: You will have a designated TF for your project, and you are welcome to schedule meetings between your team and the instructor.

- Project deliverable: A well-formatted Jupyter notebook (optimized for readability) containing:
  - Clear exposition of:
    - Problem statement - what problem does the paper aim to solve?
    - Context/scope - why is this problem important or interesting?
    - Existing work - what has been done in the literature?
    - Contribution - what gap in the literature is the paper trying to fill? What is its unique contribution?
    - Technical content (high level) - what are the high-level ideas behind the technical contribution?
    - Technical content (details) - highlight (do not copy and paste entire sections) the relevant details that are important to focus on (e.g., if there is a model, define it; if there is a theorem, state it and explain why it is important).
    - Experiments - which types of experiments were performed? What claims were these experiments trying to prove? Did the results prove the claims?
    - Evaluation (your opinion) - do you think the work is technically sound? Do you think the proposed model/inference method is practical to use on real data and tasks? Do you think the experimental section was strong (is there sufficient evidence to support the claims and eliminate confounding factors)?
    - Future work (for those interested in continuing research in a related field) - can you suggest a concrete change or modification that would improve the existing solution(s) to the problem of interest? Try to implement some of these changes/modifications.
    - Broader Impact - how could this work impact (both positively and negatively) the broader machine learning community and society at large when this technology is deployed? In the applications of this technology, who are the potential human stakeholders? What are the potential risks to the interests of these stakeholders in the failure modes of this technology? Is there potential to exploit this technology for malicious purposes?


Your exposition should focus on summarization and highlighting (aiming for an audience of peers who have taken AM207). There is no point in rewording the paper itself. Reorganize and explain the ideas in a way that makes sense to you, that features the most salient/important aspects of the paper, and that demonstrates understanding and synthesis.


  - Code:
    - At least one clear, working pedagogical example demonstrating the problem the paper claims to solve.
    - At least a bare-bones implementation of the model/algorithm/solution (in some cases, with the approval of your instructor, you may make simplifying assumptions about the model/algorithm/solution).
    - A demonstration, on at least one instance, that your implementation solves the problem.
    - A demonstration, on at least one instance, of the failure mode of the model/algorithm/solution, with an explanation of why the failure occurred (Is the dataset too large? Did you choose a bad hyperparameter?). The point of this is to expose edge cases to the user.


You are welcome to study any code that is provided with the paper; however, you are not allowed to copy code. Your implementation must be your own. If a public repo is available for your paper, you are encouraged to first try reproducing some results using the authors' code, which will give you an idea of how their algorithm/model works.

Examples of Projects from Fall 2019:

Github repo of Fall 2019 AM207 projects

List of Pre-Approved Papers:

Out of Distribution Detection, Dataset Shifts

- Know Your Limits: Uncertainty Estimation with ReLU Classifiers Fails at Reliable OOD Detection

- Identifying Untrustworthy Predictions in Neural Networks by Geometric Gradient Analysis

- The Dimpled Manifold Model of Adversarial Examples in Machine Learning

- Adversarial Examples Are Not Bugs, They Are Features; A Discussion of "Adversarial Examples Are Not Bugs, They Are Features"

- WILDS: A Benchmark of in-the-Wild Distribution Shifts

- CSI: Novelty Detection via Contrastive Learning on Distributionally Shifted Instances

- Provably Robust Detection of Out-of-distribution Data (almost) for free

Interpretations, Explanations, Fairness of Models

- Local Explanations via Necessity and Sufficiency: Unifying Theory and Practice

- BayLIME: Bayesian Local Interpretable Model-Agnostic Explanations

- Explaining Bayesian Neural Networks

- Measuring Data Leakage in Machine-Learning Models with Fisher Information

- Have We Learned to Explain?: How Interpretability Methods Can Learn to Encode Predictions in their Interpretations

- Saliency is a Possible Red Herring When Diagnosing Poor Generalization

- Selective Classification Can Magnify Disparities Across Groups

- Counterfactual risk assessments, evaluation, and fairness

- Does knowledge distillation really work?

- Feature Attributions and Counterfactual Explanations Can Be Manipulated

- POTs: Protective Optimization Technologies

- Reliable Post hoc Explanations: Modeling Uncertainty in Explainability

- Removing Spurious Features can Hurt Accuracy and Affect Groups Disproportionately

Impacts and Applications of ML

- Learning advanced mathematical computations from examples

- The effect of differential victim crime reporting on predictive policing systems; Beyond Bias: Re-Imagining the Terms of ‘Ethical AI’ in Criminal Law

- A Bayesian Model of Cash Bail Decisions

- Phenotyping with Prior Knowledge using Patient Similarity

- Hidden Risks of Machine Learning Applied to Healthcare: Unintended Feedback Loops Between Models and Future Data Causing Model Degradation

- Risk score learning for COVID-19 contact tracing apps

- CheXbreak: Misclassification Identification for Deep Learning Models Interpreting Chest X-rays

Deep Bayesian and Ensemble Models

- Variational Refinement for Importance Sampling Using the Forward Kullback-Leibler Divergence

- Diagnostics for Conditional Density Models and Bayesian Inference Algorithms

- Post-hoc loss-calibration for Bayesian neural networks

- Scaling Hamiltonian Monte Carlo Inference for Bayesian Neural Networks with Symmetric Splitting

- Learnable Uncertainty under Laplace Approximations

- Rethinking Function-Space Variational Inference in Bayesian Neural Networks

- Fast Adaptation with Linearized Neural Networks

- Beyond Marginal Uncertainty: How Accurately can Bayesian Regression Models Estimate Posterior Predictive Correlations?

- Bayesian Deep Learning via Subnetwork Inference

- What Are Bayesian Neural Network Posteriors Really Like?

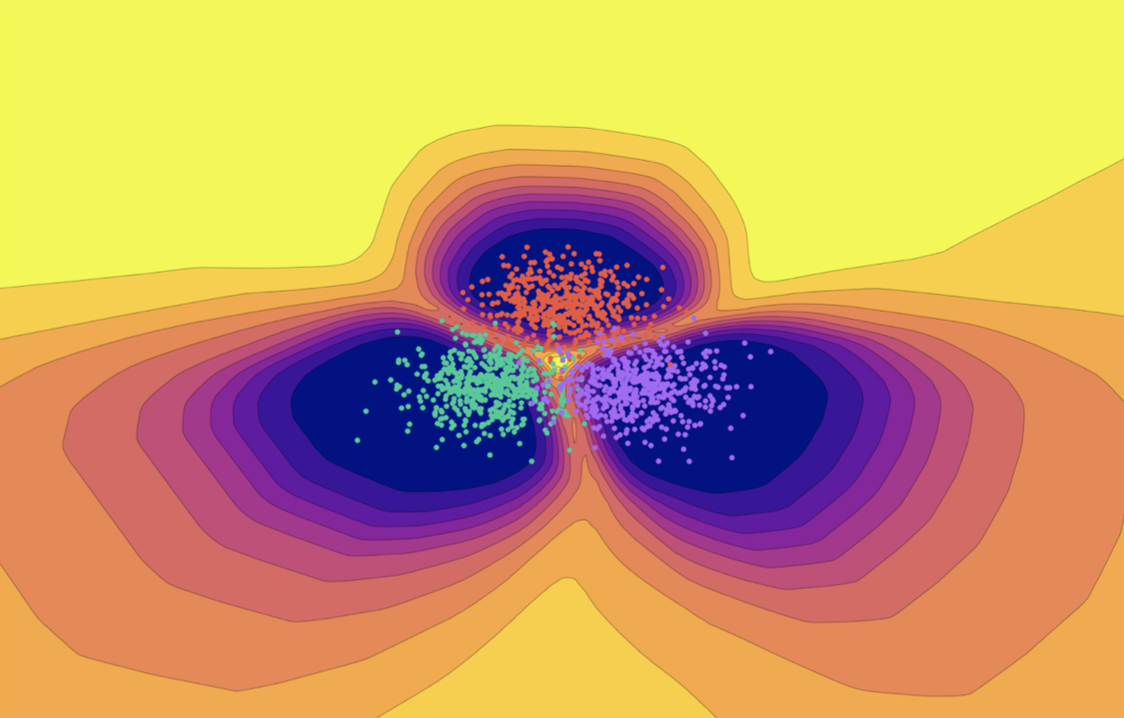

- Loss Surface Simplexes for Mode Connecting Volumes and Fast Ensembling

- No MCMC for Me: Amortized Sampling for Fast and Stable Training of Energy-Based Models

- Repulsive Deep Ensembles are Bayesian

- Learning Neural Network Subspaces

- Deep Ensemble Uncertainty Fails as Network Width Increases: Why, and How to Fix It